1️⃣ Terraform + Ansible + Docker Swarm = 🔥

The code used in this blog can be found here.

Infrastructure as Code (IaC) means defining your infrastructure in some form of text (typically code).

You execute this code to define, deploy, update or destroy your infra.

It’s a concept where you shift your mindset to “treat all aspects of operations as software” 💻

Gone are the days when you would log into a cloud console and click buttons to build your resources… instead, these are created from code templates!

The value of IaC is that you get a versioned, easy-to-read source of truth. It doubles as documentation: you don’t have to count down the days until that sysadmin returns from holiday 🏖️ to find out about your infra. You step right past that bottleneck.

If your infra is defined in code, then developers can take ownership of automating their pipelines and kicking off their own deployments. 🎉

When this workflow is automated, deployments are faster and more reliable. Humans are prone to error: when tired, we can miss a step or mistype a command. Automating the process makes every run consistent. 🤖

So, let’s dive right into it… 🤿

Creating our infrastructure 📦

We will use the IaC tool Terraform to provision our cloud resources.

⚠️ This blog uses AWS as its cloud provider!

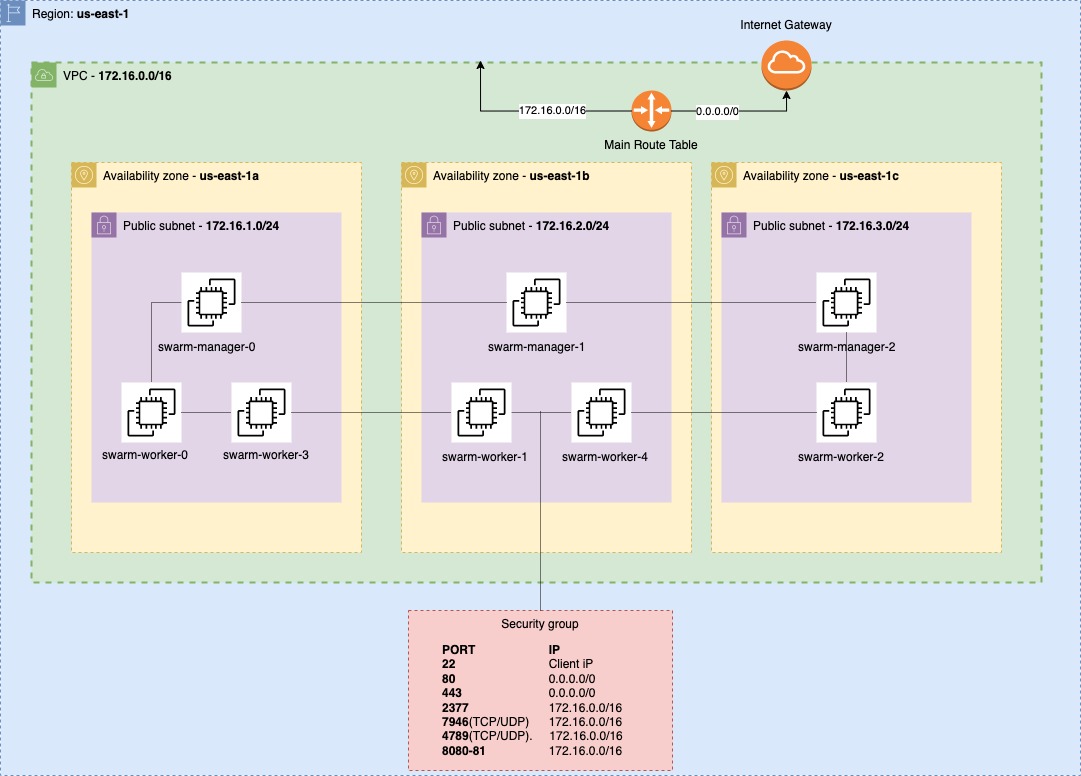

From 50,000 feet, our end goal will be to create of an environment that looks like 👇

Terraform will create a VPC with a specific CIDR block.

Within this VPC, there will be multiple subnets that house a configurable number of EC2 compute instances.

These machines will be provisioned across multiple Availability Zones.

TCP/UDP traffic will be allowed (on several ports) between instances within the VPC (Docker Swarm’s networking requires this).

Terraform will generate an inventory file which will be used by Ansible afterwards to install the required software needed for swarm.

It will also create a temporary private key that gives whoever runs the Terraform script SSH access to each machine.

How Terraform does all this magic is beyond the scope of this blog, but in layman’s terms…

- We write some Terraform code (using HCL) that describes the infrastructure we want.

- Terraform converts this code into a plan… showing us exactly what changes it will make to reach the desired state that we defined in the code.

- Once we’re happy with the plan, Terraform applies the changes (under the hood, it makes the necessary API calls to our ☁️ provider to create/modify resources).

- Terraform keeps track of the state of our infrastructure 🔑, so it knows what resources have been created and what changes have been made!

- Need to make changes to our Infra? No problem. We can easily update our code and repeat the ⬆️.

- Terraform will generate a new plan highlighting the changes and apply the updates to our infra (the state will be updated too!)

Terraform doesn’t force us to use a particular file structure.

If we really wanted to, we can define everything in a single file.

However, as you can imagine, terraform configs can get biggggg. So, it’s desirable that we split our infrastructure into logical groupings called modules.

For this blog, I split this mini-project into three child modules:

- `network`: all networking-related infrastructure, including the VPC, subnets and internet gateway.

- `security`: all security-related resources, including security groups / Network ACLs.

- `compute`: all compute resources (EC2 instances).

⚠️ NOTE: Every Terraform configuration has a root module. This is the directory where we run terraform plan / terraform apply etc. Within this root module we will have the child modules (as described above).

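Our directory layout will therefore look roughly like this: the root module holds main.tf, inputs.tf and outputs.tf, while a modules directory holds network/main.tf, security/main.tf and compute/main.tf.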

So, let’s look at the child modules 👀

Networking 🕸️

Diving into it:

This script creates a virtual private cloud (VPC), public subnets and an internet gateway. ☁️

It sets the CIDR block for the VPC, creates the subnets in specified availability zones, and associates them with a public route table. 🗺️

By using a `locals` block and Terraform's built-in `cidrsubnet` function, we create the subnet CIDR blocks programmatically!
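For example, `cidrsubnet(prefix, newbits, netnum)` extends the prefix by `newbits` bits and picks subnet number `netnum`, so `cidrsubnet("10.0.0.0/16", 8, 1)` gives `10.0.1.0/24`. Here's a minimal sketch of how such a network module could use it, assuming a `10.0.0.0/16` VPC and one public subnet per AZ. The variable names, defaults and AZ list are my own illustrative assumptions, not the code from the repo.

```hcl
# Hypothetical inputs: names and defaults are illustrative assumptions
variable "vpc_cidr" {
  type    = string
  default = "10.0.0.0/16"
}

variable "availability_zones" {
  type    = list(string)
  default = ["eu-west-1a", "eu-west-1b", "eu-west-1c"] # must match the provider's region
}

locals {
  # One /24 per AZ: /16 + 8 new bits = /24, numbered by list index
  # -> ["10.0.0.0/24", "10.0.1.0/24", "10.0.2.0/24"]
  public_subnet_cidrs = [
    for i, az in var.availability_zones : cidrsubnet(var.vpc_cidr, 8, i)
  ]
}

resource "aws_vpc" "main" {
  cidr_block           = var.vpc_cidr
  enable_dns_hostnames = true
  tags                 = { Name = "swarm-vpc" }
}

resource "aws_subnet" "public" {
  count                   = length(var.availability_zones)
  vpc_id                  = aws_vpc.main.id
  cidr_block              = local.public_subnet_cidrs[count.index]
  availability_zone       = var.availability_zones[count.index]
  map_public_ip_on_launch = true # instances get a public IP for SSH/Ansible
  tags                    = { Name = "swarm-public-${count.index}" }
}
```

Adding an AZ to the list adds a subnet with the next free /24, with no CIDR arithmetic done by hand.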

The route table contains a default route pointing to the internet gateway, allowing outbound internet access for instances in the public subnets. 🌐
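A sketch of the routing side, assuming an `aws_vpc.main` and a count-based `aws_subnet.public` live in the same module (all resource names here are illustrative):

```hcl
# Gateway that connects the VPC to the internet
resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.main.id
  tags   = { Name = "swarm-igw" }
}

resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  # Default route: anything not destined for the VPC leaves via the IGW
  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.igw.id
  }

  tags = { Name = "swarm-public-rt" }
}

# Attach every public subnet to the public route table
resource "aws_route_table_association" "public" {
  count          = length(aws_subnet.public)
  subnet_id      = aws_subnet.public[count.index].id
  route_table_id = aws_route_table.public.id
}
```

AWS adds the VPC-local route (traffic within the VPC's CIDR block) implicitly, so only the `0.0.0.0/0` default route needs declaring.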

I’m a big advocate of tagging each resource … so I’ve set tags everywhere for easier resource management!

Nice, we’ve got our networking infra setup. Where to next?

Security 👮

Let’s look at the script that will create our security groups:

In a nutshell, this script will create a security group with several ingress and egress rules.

There are a few cool things going on in the above…

The data block at the top of the script retrieves the public IP address of the client (me!) by making a request to https://ifconfig.io.

It stores this value in a local Terraform variable myip (appending /32 to represent a CIDR range of a single IP address).

The chomp function removes any trailing newline characters from the response body of the HTTP request.
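The data source and local value described above might look roughly like this. It's a sketch assuming the `hashicorp/http` provider v3 or later, where the body is exposed as `response_body` (older versions used `body`):

```hcl
# Ask an external service for the public IP of whoever runs terraform
data "http" "myip" {
  url = "https://ifconfig.io"
}

locals {
  # chomp() drops the trailing newline; /32 turns the address into a single-host CIDR
  myip = "${chomp(data.http.myip.response_body)}/32"
}
```

Because the lookup runs on every plan, the SSH rule follows you if your IP changes. The flip side: running Terraform from a different network rewrites the rule for that network.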

The next resource created is the main player… the security group which will be attached to all EC2 instances in our VPC!

Blasting through it:

- Port 22 is required for SSH access from your local machine.

- Ports 80 and 443 will be used for HTTP/S access to a proxy (an NGINX resource, to be exact).

- The rest of the ports are used by Docker Swarm for internal communication.

- 2377 is for cluster management communications.

- Port 7946 (TCP and UDP) is used for communication among nodes, and port 4789 (UDP) for overlay network traffic.

- 8081-8082 are ports that will be exposed by Docker containers.

- The last entry allows communication from the cluster to the outside 🌎 without any restriction.
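Here's one way the security group itself could be written. It's a sketch under a few assumptions of mine: `var.vpc_id` and `var.vpc_cidr` are hypothetical inputs passed in from the network module, `local.myip` is defined in this same module, HTTP/S is open to the world, and the Swarm and container ports are only reachable from inside the VPC. `dynamic` blocks keep the repetitive rules in a single table.

```hcl
variable "vpc_id" { type = string }   # hypothetical input
variable "vpc_cidr" { type = string } # hypothetical input

locals {
  # Ports only reachable from inside the VPC
  intra_vpc_rules = [
    { from = 2377, to = 2377, protocol = "tcp" }, # Swarm cluster management
    { from = 7946, to = 7946, protocol = "tcp" }, # node-to-node communication
    { from = 7946, to = 7946, protocol = "udp" },
    { from = 4789, to = 4789, protocol = "udp" }, # overlay network traffic
    { from = 8081, to = 8082, protocol = "tcp" }, # ports exposed by containers
  ]
}

resource "aws_security_group" "swarm" {
  name   = "swarm-sg"
  vpc_id = var.vpc_id

  # SSH only from the machine running terraform (local.myip is that IP as a /32)
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = [local.myip]
  }

  # HTTP/S to the NGINX proxy (open to everyone: an assumption)
  dynamic "ingress" {
    for_each = [80, 443]
    content {
      from_port   = ingress.value
      to_port     = ingress.value
      protocol    = "tcp"
      cidr_blocks = ["0.0.0.0/0"]
    }
  }

  # Swarm and container traffic between nodes inside the VPC
  dynamic "ingress" {
    for_each = local.intra_vpc_rules
    content {
      from_port   = ingress.value.from
      to_port     = ingress.value.to
      protocol    = ingress.value.protocol
      cidr_blocks = [var.vpc_cidr]
    }
  }

  # Allow all outbound traffic ("-1" means all protocols)
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```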

Ok…

- [✔️] Networking

- [✔️] Security

Let’s now write a script which will create some EC2s!

Compute 🖥️

Looking at:

This script is your shop window. 💸

It will create the computing infrastructure required for a Docker swarm cluster to run in the cloud (AWS).

First, we grab the AMI ID for Amazon Linux 2.

Since this project is for development purposes only, we will create a temporary RSA private key which will grant us SSH access to the swarm servers.

This key is also used to create an AWS Key Pair.
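A sketch of the AMI lookup and the throwaway key, assuming the `hashicorp/tls` and `hashicorp/local` providers. The name filter is the usual pattern for Amazon Linux 2 x86_64 images; the key name and `.pem` path are illustrative, and writing the key to disk with `local_sensitive_file` is my assumption about how the key reaches your machine.

```hcl
# Most recent Amazon Linux 2 (x86_64, gp2) image published by Amazon
data "aws_ami" "amazon_linux_2" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["amzn2-ami-hvm-*-x86_64-gp2"]
  }
}

# Throwaway key for development: the private key is also stored in Terraform state
resource "tls_private_key" "swarm" {
  algorithm = "RSA"
  rsa_bits  = 4096
}

# Register the public half with EC2 so instances accept it
resource "aws_key_pair" "swarm" {
  key_name   = "swarm-dev-key" # hypothetical name
  public_key = tls_private_key.swarm.public_key_openssh
}

# Save the private half locally (owner read/write only) for ssh and Ansible
resource "local_sensitive_file" "ssh_key" {
  content         = tls_private_key.swarm.private_key_pem
  filename        = "${path.root}/swarm-key.pem" # hypothetical path
  file_permission = "0600"
}
```

With that in place, something like `ssh -i swarm-key.pem ec2-user@<public-ip>` should get you onto a node. Since `tls_private_key` keeps the private key unencrypted in state, this really is a development-only pattern.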

We’ve defined two resources in the above for creating manager and worker nodes in AWS (aws_instance.swarm-manager & aws_instance.swarm-worker).

These resources take several arguments (count, instance_type, vpc_security_group_ids) to spin up the required EC2 instances.

The key pair that we created earlier governs SSH access.

User data is also passed to each instance to set the hostname dynamically.
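Roughly how the two node resources could be declared. This sketch assumes hypothetical inputs `subnet_ids` and `security_group_id` coming from the other modules, and that the AMI lookup and key pair described above are declared in the same module as `data.aws_ami.amazon_linux_2` and `aws_key_pair.swarm` (names are mine). The `%` spreads nodes round-robin across subnets, and therefore across AZs.

```hcl
# Hypothetical inputs: names and defaults are illustrative
variable "manager_count" { default = 1 }
variable "worker_count" { default = 2 }
variable "instance_type" { default = "t3.micro" }
variable "subnet_ids" { type = list(string) }
variable "security_group_id" { type = string }

resource "aws_instance" "swarm-manager" {
  count                  = var.manager_count
  ami                    = data.aws_ami.amazon_linux_2.id
  instance_type          = var.instance_type
  subnet_id              = var.subnet_ids[count.index % length(var.subnet_ids)]
  vpc_security_group_ids = [var.security_group_id]
  key_name               = aws_key_pair.swarm.key_name

  # Runs once on first boot (via cloud-init) to give each node a unique hostname
  user_data = <<-EOF
    #!/bin/bash
    hostnamectl set-hostname swarm-manager-${count.index}
  EOF

  tags = { Name = "swarm-manager-${count.index}" }
}

resource "aws_instance" "swarm-worker" {
  count                  = var.worker_count
  ami                    = data.aws_ami.amazon_linux_2.id
  instance_type          = var.instance_type
  subnet_id              = var.subnet_ids[count.index % length(var.subnet_ids)]
  vpc_security_group_ids = [var.security_group_id]
  key_name               = aws_key_pair.swarm.key_name

  user_data = <<-EOF
    #!/bin/bash
    hostnamectl set-hostname swarm-worker-${count.index}
  EOF

  tags = { Name = "swarm-worker-${count.index}" }
}
```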

Finally, a local file is created containing an Ansible inventory which will list the IPs of the swarm manager[s] / swarm worker[s].
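One way to render that inventory, assuming `aws_instance.swarm-manager` / `aws_instance.swarm-worker` resources created with `count`, Ansible's INI inventory format, and Amazon Linux 2's default `ec2-user` login. The file path and group names are illustrative.

```hcl
resource "local_file" "ansible_inventory" {
  filename = "${path.root}/inventory.ini" # hypothetical location
  # [*] is the splat expression: it collects public_ip from every counted instance
  content = <<-EOT
    [managers]
    ${join("\n", aws_instance.swarm-manager[*].public_ip)}

    [workers]
    ${join("\n", aws_instance.swarm-worker[*].public_ip)}

    [all:vars]
    ansible_user=ec2-user
  EOT
}
```

You'd still point Ansible at the private key, for example via `ansible_ssh_private_key_file` or the `--private-key` flag.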

TL;DR All being well, this script should create several manager / worker nodes 🙏

Executing our terraform scripts

So, now that we’ve created the relevant child modules… how do we go about executing each script?

Recall terraform apply will attempt to create the resources specified in a .tf file… so do we cd into the below directories and execute terraform apply in each of them?

- /modules/network/main.tf

- /modules/security/main.tf

- /modules/compute/main.tf

Simple answer: No.

Recall, inside the root module, we create a main.tf file.

This serves as the primary entry point for provisioning infrastructure in Terraform.

This root module will also house:

- outputs.tf: declarations of all output values.

- inputs.tf: declarations of all input values.
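As a sketch, the two files might contain something like the following. The variable and output names are hypothetical, and the outputs assume the compute module exposes matching outputs of its own.

```hcl
# inputs.tf
variable "region" {
  description = "AWS region to deploy into"
  type        = string
  default     = "eu-west-1"
}

variable "vpc_cidr" {
  description = "CIDR block for the VPC"
  type        = string
  default     = "10.0.0.0/16"
}

# outputs.tf
output "manager_public_ips" {
  description = "Public IPs of the swarm managers"
  value       = module.compute.manager_public_ips
}

output "worker_public_ips" {
  description = "Public IPs of the swarm workers"
  value       = module.compute.worker_public_ips
}
```

After `terraform apply`, `terraform output manager_public_ips` prints the value without digging through state.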

Looking at main.tf in the root module:

We can see that each of the modules we discussed above (network, security and compute) is declared.

Running terraform apply inside the root module will execute this script and in turn:

- Create all networking infrastructure

- Create the required security group

- Create the EC2 instances which will form the manager & worker nodes of the swarm cluster!

- Modules depend on each other: the network will be created first, followed by the security group and then lastly the EC2 instances.

- The provider block specifies that AWS should be used, with a region specified by the var.region variable.

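A minimal sketch of a root main.tf wired this way, assuming hypothetical module inputs and outputs (`vpc_id`, `public_subnet_ids`, `security_group_id`, ...). There's no explicit "run order" in Terraform: it builds a dependency graph from the references between modules, which is what makes the VPC and subnets get created before the resources that consume them.

```hcl
terraform {
  required_providers {
    aws   = { source = "hashicorp/aws" }
    http  = { source = "hashicorp/http" }
    tls   = { source = "hashicorp/tls" }
    local = { source = "hashicorp/local" }
  }
}

provider "aws" {
  region = var.region # declared in inputs.tf
}

module "network" {
  source   = "./modules/network"
  vpc_cidr = var.vpc_cidr
}

module "security" {
  source   = "./modules/security"
  vpc_id   = module.network.vpc_id # this reference orders network before security
  vpc_cidr = var.vpc_cidr
}

module "compute" {
  source            = "./modules/compute"
  subnet_ids        = module.network.public_subnet_ids
  security_group_id = module.security.security_group_id
}
```

From the root directory, `terraform init` downloads the providers and wires up the local modules, then `terraform plan` / `terraform apply` take it from there.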

Ok, I think that’s enough for this blog post 😴

We’re set up nicely to configure these freshly created terraform resources using Ansible! This will be the focus of the second blog in this series.

We might even deploy a stack to our swarm cluster that illustrates 🔵🟢 deployments 👀