2️⃣ Terraform + Ansible + Docker Swarm = 🔥

The code used in this blog can be found here.

We’ve a list of hosts provisioned by terraform.

We need a list of things to happen on these hosts.

We need to ensure that those tasks happen.

🥁 (drum roll) …

Enter Ansible. Shell scripts on steroids. Configuration as code.

To keep this brief, I want to install a litany of packages on each machine (🐳 + 🐍 etc), create a user profile for moi, initiate a Docker Swarm cluster, and deploy a stack within Swarm.

I could create a bash script to run all these actions for me… 🤔

What happens if I rerun the script? I’ll probably get an error saying that the system is already in the desired state.

I could put a lot of error checking into that script to make sure there are no unintended consequences if I run it again.

I could also get the script to report if it had to make a change or if the change had already been executed.

But, if I followed through with all these steps, then I’ve basically gone and created my own version of Ansible!
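To make that concrete, here is a rough sketch of what one such hand-rolled "idempotent" step might look like in shell. The helper name `ensure_line` and the line it writes are made up for illustration; the point is only that the step checks the current state first and reports `changed` or `ok`, much like Ansible does for every task.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if it is not already there,
# and report "changed" or "ok" in the spirit of Ansible's task output.
ensure_line() {
  local line="$1" file="$2"
  # -q quiet, -x match the whole line, -F treat the pattern as a fixed string
  if [ -f "$file" ] && grep -qxF -- "$line" "$file"; then
    echo "ok"
  else
    printf '%s\n' "$line" >> "$file"
    echo "changed"
  fi
}

# Demo against a throwaway file: the first run changes it, the second is a no-op.
demo_file="$(mktemp)"
ensure_line "export EDITOR=vim" "$demo_file"   # prints: changed
ensure_line "export EDITOR=vim" "$demo_file"   # prints: ok
rm -f "$demo_file"
```

Now imagine writing that check-then-act dance for every package, user and service on every host. That is the bookkeeping Ansible's modules take off our hands.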

Ansible ⭐

Ansible is a configuration management tool.

It helps manage and automate the configuration of systems, applications and infrastructure.

It uses YAML to write clear and concise playbooks that define a set of automation tasks.

Most of the modules built into Ansible are idempotent, i.e. no matter how many times we run a task, we end up with the same result.

IMO, Ansible is still fundamental in DevOps engineering. I find it incredibly useful and powerful. If you have to get info from 100 machines concurrently, or copy data from A to B-Z, Ansible makes this a breeze 🔥

Let’s run an ad-hoc ping command to ensure our terraform provisioned servers are up:

❯ ansible all -m ping -i inventory.ini
swarm-manager-0 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
...
swarm-worker-1 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
swarm-worker-2 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}

Looking good 😎

Now, we can expand on this.

Let’s create a set of Ansible roles that will configure all these servers for us. Configure = update packages, install a newer version of Python, install the Docker engine, initiate a Swarm, tag each node in the Swarm with some relevant label and finally deploy a stack to the cluster!

This is what our directory looks like 👇

❯ tree . -a -L 2
.
├── ansible.cfg
├── check_master.yml
├── delete_swarm.yml
├── deploy_swarm.yml
├── destroy_infra.yml
├── group_vars
│   └── all.yml
├── init_terraform.yml
├── inventory.ini
└── roles
    ├── add-swarm-labels
    ├── create-user
    ├── deploy-stack
    ├── destroy-infra
    ├── docker-installation
    ├── init-terraform
    ├── leave-swarm
    ├── swarm-init
    ├── swarm-manager
    └── swarm-worker

As you can see, we have 10 roles. I’ll not be explaining every role, much to your delight!

Please refer to the repo for a better understanding of what they actually do.

They are named so that their purpose should be self-explanatory.

For example, let’s look at swarm-init:

---
- name: Ensure docker daemon is running
  service:
    name: docker
    state: restarted

- name: Initialize Docker Swarm
  docker_swarm:
    state: present
  run_once: true # If I've multiple managers, run on first
  tags: swarm

- name: Get the Manager join-token
  shell: docker swarm join-token --quiet manager
  register: manager_token
  tags: swarm

- name: Get the worker join-token
  shell: docker swarm join-token --quiet worker
  register: worker_token
  tags: swarm

In summary, this role performs four tasks:

- It restarts the Docker service, so the daemon is known to be running.

- It initialises Docker Swarm if it has not already been initialised.

- It retrieves the join-token for the manager nodes.

- It retrieves the join-token for the worker nodes.

These tokens are stored in the variables manager_token and worker_token respectively, using the register keyword.

So we’ve 10 roles we want to run (give or take).

Let’s create a playbook, deploy_swarm.yml, in the root directory which will execute them for us:

---
- name: install packages
  hosts: docker-nodes
  become: yes
  roles:
    - docker-installation

- name: create a new user account
  hosts: docker-nodes
  become: yes
  vars:
    users:
      - "{{ dev_user }}"
  roles:
    - create-user

- name: initialize docker swarm
  hosts: swarm-manager-0
  become: yes
  vars:
    ansible_python_interpreter: /usr/bin/python3.8
  roles:
    - swarm-init

- name: Add managers to the swarm
  hosts: managers
  become: yes
  vars:
    ansible_python_interpreter: /usr/bin/python3.8
  roles:
    - swarm-manager

- name: add workers to the swarm
  hosts: workers
  become: yes
  vars:
    ansible_python_interpreter: /usr/bin/python3.8
  roles:
    - swarm-worker

- name: Add labels to each node in the swarm
  hosts: swarm-manager-0
  become: yes
  roles:
    - add-swarm-labels

- name: Deploy docker stack for blue-green deployment
  hosts: swarm-manager-0
  become: yes
  vars:
    ansible_python_interpreter: /usr/bin/python3.8
  roles:
    - deploy-stack

Speed round ⏩

In summary, this playbook automates the deployment and configuration of a Docker Swarm by installing required packages, creating new user accounts, initializing the Swarm, adding nodes as managers and workers, adding labels to nodes, and deploying a Docker stack to illustrate a 🔵🟢 deployment.

What did we deploy there? 🐳

Our last task in the above playbook involved deploying some Docker services to the cluster.

If you cd into the terraform directory, you should be able to run some remote exec commands to inspect what services were created:

$ alias terraform_ssh="ssh -i test.pem $(terraform output swarm_manager_public_ip | tr -d '"')"

$ terraform_ssh docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

iuib974nlhnd swarm_blue-app replicated 4/4 irishbandit/devblog:flask-v2 *:30001->8080/tcp

3j0zjxsaauhp swarm_green-app replicated 4/4 irishbandit/devblog:flask-v2 *:30000->8080/tcp

v1dw1ndduez9 swarm_nginx replicated 1/1 nginx:stable-alpine *:80->80/tcp

Wow, let’s take a step back… How did we SSH into one of the manager nodes?

So, to jump onto a server that Terraform created for us, you might’ve been inclined to copy the IP directly from the Ansible inventory.ini file (created by terraform) or by going to the AWS EC2 Dashboard and taking the IP from there.

You can do this … but, we don’t need to.

Terraform has a command that can be used to retrieve any information we defined as an output.

Therefore, terraform output swarm_manager_public_ip returns the public IP of the first Swarm manager!

We used this output command to construct a custom SSH command which we wrapped in the alias terraform_ssh.
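Why the `tr -d '"'`? When you ask for a single string output, `terraform output` prints it wrapped in double quotes, which SSH would choke on. Here is a tiny sketch of the clean-up step. `fake_terraform_output` is a stand-in I made up so the snippet runs without any Terraform state, and the IP is a documentation address, not a real host.

```shell
# Stand-in for `terraform output swarm_manager_public_ip`; the IP is a
# documentation address, not one of our servers.
fake_terraform_output() {
  echo '"203.0.113.10"'
}

raw="$(fake_terraform_output)"
clean="$(fake_terraform_output | tr -d '"')"   # delete every double quote

echo "as printed: $raw"
echo "after tr:   $clean"
```

As far as I know, recent Terraform releases also accept `terraform output -raw <name>`, which prints the bare string and would make the `tr` step unnecessary.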

We can leverage this command to remotely execute commands on the Docker swarm cluster, à la:

> #Check which nodes have the label deployment=blue

> terraform_ssh docker node ls --filter node.label=deployment=blue

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

aeg18v5j5cqd6yerr9pn23m0e swarm-worker-2 Ready Active 20.10.17

>

> #Check which nodes have the label deployment=green

> terraform_ssh docker node ls --filter node.label=deployment=green

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

wjeqr6oeh5gga554ujq0ot6cm swarm-worker-0 Ready Active 20.10.17

t7sc5b12a6hwnhbccuq4vkmg8 swarm-worker-1 Ready Active 20.10.17

>

> #Check which nodes have the label type=worker

> terraform_ssh docker node ls --filter node.label=type=worker

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

wjeqr6oeh5gga554ujq0ot6cm swarm-worker-0 Ready Active 20.10.17

t7sc5b12a6hwnhbccuq4vkmg8 swarm-worker-1 Ready Active 20.10.17

aeg18v5j5cqd6yerr9pn23m0e swarm-worker-2 Ready Active 20.10.17

>

> #Check which nodes have the label type=manager

> terraform_ssh docker node ls --filter node.label=type=manager

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

zc6linihccs2tvq4c4uiw7ddd * swarm-manager-0 Ready Active Leader 20.10.17

yktqelcnbzgmwdub3oea7vi0o swarm-manager-1 Ready Active Reachable 20.10.17

9a6iap15zv6oquw7gkfb33b9n swarm-manager-2 Ready Active Reachable 20.10.17

>

> #Check what services are running on the cluster

> terraform_ssh docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

iuib974nlhnd swarm_blue-app replicated 4/4 irishbandit/devblog:flask-v2 *:30001->8080/tcp

3j0zjxsaauhp swarm_green-app replicated 4/4 irishbandit/devblog:flask-v2 *:30000->8080/tcp

v1dw1ndduez9 swarm_nginx replicated 1/1 nginx:stable-alpine *:80->80/tcp

Ok, we can see three services running:

- swarm_green-app

- swarm_blue-app

- swarm_nginx

In a nutshell, we want to hit an NGINX container that has exposed port 80. This process will act as a reverse proxy and will forward our request to an appropriate backend application (blue-app or green-app).

We control which backend application NGINX forwards the request to via an ENV variable, ACTIVE_BACKEND (shown later).

The backend app is written in Python and uses the Flask web framework to create a web application. If a GET/POST request is made to a Flask endpoint, it returns a welcome message including the hostname of the server and the value of the ENV var DEPLOYMENT.

The prefix swarm_ is the name of the stack that Ansible asked Docker Swarm to deploy.

It uses a compose file to create the stack in the cluster:

version: '3.8'

services:
  nginx:
    image: nginx:stable-alpine
    command: sh -c "envsubst < /etc/nginx/conf.d/default.template > /etc/nginx/conf.d/default.conf && nginx -g 'daemon off;'"
    environment:
      - ACTIVE_BACKEND=green-app
      - BACKUP_BACKEND=blue-app
    ports:
      - 80:80
    volumes:
      - /opt/default.template:/etc/nginx/conf.d/default.template
    deploy:
      replicas: 3
      placement:
        constraints:
          - node.labels.type == manager
      update_config:
        parallelism: 1
        delay: 0s

  green-app:
    image: irishbandit/devblog:flask-v2
    hostname: "green-app-{{.Node.Hostname}}-{{.Task.ID}}"
    ports:
      - 8080
    environment:
      - DEPLOYMENT=GREEN
    command: python app.py
    deploy:
      replicas: 4
      placement:
        constraints:
          - node.labels.deployment == green
          - node.labels.type != manager

  blue-app:
    image: irishbandit/devblog:flask-v2
    hostname: "blue-app-{{.Node.Hostname}}-{{.Task.ID}}"
    ports:
      - 8080
    environment:
      - DEPLOYMENT=BLUE
    command: python app.py
    deploy:
      replicas: 4
      placement:
        constraints:
          - node.labels.deployment == blue
          - node.labels.type != manager

⚠️ A few important things to note about the above.

- The configuration for NGINX is generated from a template (default.template) using the envsubst command.
  - The values of the environment variables ACTIVE_BACKEND and BACKUP_BACKEND are substituted into the template to generate the final configuration file: /etc/nginx/conf.d/default.conf.
  - This is how we will control traffic to blue-green applications.
  - We set parallelism to 1, which means one container will be updated at a time!

- We defined how many replicas of a service we want to run.
  - The NGINX and 🔵🟢 apps have multiple replicas running.

- We’ve used placement constraints to ensure services run on particular node[s].
  - Swarm will try to place containers with the goal of providing maximum resiliency. So, if you had five replicas of one service, it will try to place these containers on five different machines.
  - Sometimes, however, you need to control where a container is run. In this case, it’s logical to separate green and blue applications.
  - We ran an Ansible task that runs a shell script to tag nodes with a specific label (green/blue/worker/manager).
  - We control where services are placed by filtering on these tags/labels.
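For a feel of what that labelling step boils down to, here is a dry-run sketch. It is not the script from the repo: `label_node` is a made-up helper, and the node-to-label mapping is simply read off the `docker node ls` output shown earlier. The underlying command, `docker node update --label-add`, is the standard Docker CLI way of attaching a label to a Swarm node.

```shell
# Print (rather than run) the labelling command for one node.
# Drop the leading `echo` to actually execute it on a Swarm manager.
label_node() {
  local node="$1" label="$2"
  echo docker node update --label-add "$label" "$node"
}

# Deployment colours, as seen in the filtered `docker node ls` output.
label_node swarm-worker-0 deployment=green
label_node swarm-worker-1 deployment=green
label_node swarm-worker-2 deployment=blue

# Node types for the three workers and three managers.
for n in 0 1 2; do
  label_node "swarm-worker-$n" type=worker
  label_node "swarm-manager-$n" type=manager
done
```

Once the labels exist, a constraint such as `node.labels.deployment == blue` in the compose file is all Swarm needs to pin a service to the matching nodes.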

Controlling traffic between Blue-Green Applications 🏎️

NGINX diverts the traffic to the backend containers based on what the environment variable ACTIVE_BACKEND is set to:

services:
  nginx:
    ...
    command: sh -c "envsubst < /etc/nginx/conf.d/default.template > /etc/nginx/conf.d/default.conf && nginx -g 'daemon off;'"
    environment:
      - ACTIVE_BACKEND=green-app
      - BACKUP_BACKEND=blue-app

The default.template, which NGINX uses to generate its config, uses these ENV vars to define the upstream servers (recall that the envsubst command is used for variable substitution):

server {
    listen 80;

    location / {
        proxy_pass http://swarm-backend;
    }
}

upstream swarm-backend {
    server ${ACTIVE_BACKEND}:8080;
    server ${BACKUP_BACKEND}:8080 backup;
}
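To see what the rendered upstream block ends up looking like, here is a small sketch. It uses `sed` as a stand-in for `envsubst` so it runs anywhere, and `render_upstream` is a hypothetical helper, not something from the repo. The substitution it performs is the same idea: swap the two placeholders for the service names.

```shell
# The upstream block from default.template, held in a variable for the demo.
# Single quotes keep the ${...} placeholders literal.
template='upstream swarm-backend {
    server ${ACTIVE_BACKEND}:8080;
    server ${BACKUP_BACKEND}:8080 backup;
}'

# Hypothetical stand-in for envsubst: replace the two placeholders with sed.
render_upstream() {
  local active="$1" backup="$2"
  printf '%s\n' "$template" |
    sed -e 's/\${ACTIVE_BACKEND}/'"$active"'/' \
        -e 's/\${BACKUP_BACKEND}/'"$backup"'/'
}

# Current state of the stack: green is active, blue is the backup.
render_upstream green-app blue-app
```

The `backup` flag is the important bit: NGINX only sends traffic to a backup server when the primary servers are unavailable, so whichever app sits in ACTIVE_BACKEND takes all the requests.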

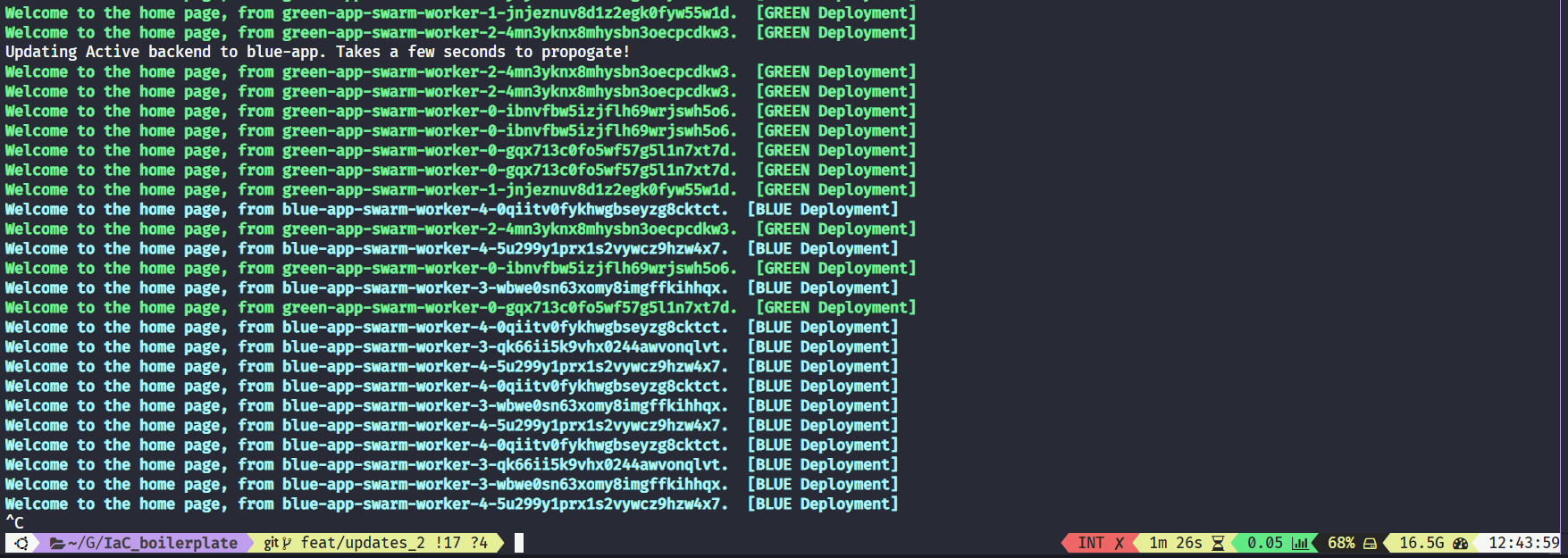

Therefore, in our current state, all traffic will be diverted to the green-applications 🟢

We can confirm this by issuing an HTTP GET request against <manager ip>/home.

# Extract the IP of swarm manager 0 using terraform outputs
PROXY_IP=$(terraform output swarm_manager_public_ip | tr -d '"')

for i in {1..3}; do
  curl -s "http://$PROXY_IP/home"
done

Welcome to the home page, from green-app-swarm-worker-0-ibnvfbw5izjflh69wrjswh5o6. [GREEN Deployment]

Welcome to the home page, from green-app-swarm-worker-2-4mn3yknx8mhysbn3oecpcdkw3. [GREEN Deployment]

Welcome to the home page, from green-app-swarm-worker-1-jnjeznuv8d1z2egk0fyw55w1d. [GREEN Deployment]

We can change the value of ACTIVE_BACKEND by updating the nginx service:

PROXY_IP=$(terraform output swarm_manager_public_ip | tr -d '"')

ssh -i test.pem $PROXY_IP docker service update --env-add ACTIVE_BACKEND=blue-app --env-add BACKUP_BACKEND=green-app swarm_nginx

⚠️ Note: If you recall our docker-stack.yml file, we set parallelism to 1. This dictates how many containers are updated at a time. Therefore, there will be some overlap between green/blue apps when the NGINX service is updated!

If we now go and hit the endpoint again, we should see return messages from our blue applications:

Welcome to the home page, from blue-app-swarm-worker-4-5u299y1prx1s2vywcz9hzw4x7. [BLUE Deployment]

Welcome to the home page, from blue-app-swarm-worker-4-0qiitv0fykhwgbseyzg8cktct. [BLUE Deployment]

Welcome to the home page, from blue-app-swarm-worker-3-qk66ii5k9vhx0244awvonqlvt. [BLUE Deployment]

All of the above behaviour can be simulated and observed by running the switch_traffic.sh bash script:

#!/bin/bash

# ANSI color codes
BLUE='\033[1;36m'
GREEN='\033[1;32m'
YELLOW='\033[1;33m'
NC='\033[0m' # No Color

# cd into terraform directory
TERRAFORM_DIR=$(pwd)/terraform
cd "$TERRAFORM_DIR" || exit 1

# Extract the IP of swarm manager 0 using terraform outputs
PROXY_IP=$(terraform output swarm_manager_public_ip | tr -d '"')

# Initialize the variables for the backends
ACTIVE="green"
BACKUP="blue"

# Initialize the variable that tracks elapsed seconds
sec=0

# Loop that issues a GET request at port 80 of the swarm manager
while true
do
  output=$(curl -s "http://$PROXY_IP/home")
  if echo "$output" | grep -q "GREEN"; then
    echo -e "${GREEN}$output${NC}"
  else
    echo -e "${BLUE}$output${NC}"
  fi

  # Increment the seconds counter
  sec=$((sec+1))

  # Switch active backends between green / blue apps every 45s
  if [ $((sec % 45)) -eq 0 ]; then
    # Swap ACTIVE and BACKUP together so they never name the same app
    if [ "$ACTIVE" = "green" ]; then
      ACTIVE="blue"
      BACKUP="green"
    else
      ACTIVE="green"
      BACKUP="blue"
    fi
    echo -e "${YELLOW}Updating active backend to ${ACTIVE}-app. Takes a few seconds to propagate!${NC}"
    ssh -i test.pem "$PROXY_IP" docker service update --env-add ACTIVE_BACKEND=${ACTIVE}-app --env-add BACKUP_BACKEND=${BACKUP}-app swarm_nginx &
  fi

  # Wait for 1 second before hitting the endpoint again
  sleep 1
done

This switches the active backend every 45 seconds.
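If you want to convince yourself of the timing without touching the cluster, the toggle can be pulled out and simulated on its own. This is only a sketch: `swap_backends` is a name I made up, and the loop counts ticks instead of sleeping, so it finishes instantly.

```shell
ACTIVE="green"
BACKUP="blue"

# Swap the two roles so ACTIVE and BACKUP never name the same app.
swap_backends() {
  local previous="$ACTIVE"
  ACTIVE="$BACKUP"
  BACKUP="$previous"
}

# Simulate 90 one-second ticks and flip on every 45th.
for tick in $(seq 1 90); do
  if [ $((tick % 45)) -eq 0 ]; then
    swap_backends
    echo "tick $tick: active=${ACTIVE}-app backup=${BACKUP}-app"
  fi
done
```

Over 90 ticks the backends flip twice: to blue at tick 45 and back to green at tick 90, which is the rhythm you should see in the coloured output of the real script.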

Finishing up

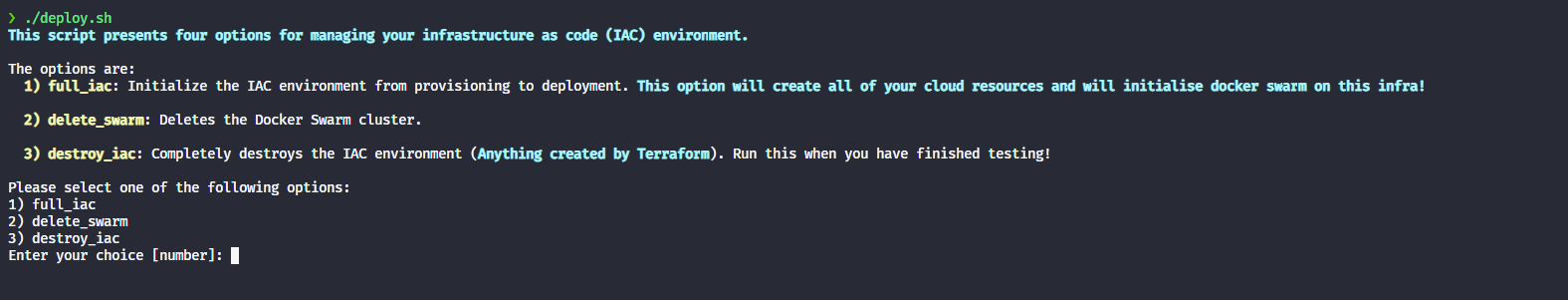

I’ve lost count of how many commands we ran over the course of these last 2 blogs 🤷

We init’d Terraform, provisioned infra, configured servers via Ansible, initiated a Docker Swarm cluster … the list goes on.

So, I’ve packaged everything up into one master script called deploy.sh.

You can provision and deploy all of what we covered with one command 🚀.

Likewise, when you’re finished … just run the script again to destroy everything!

Pass:

- 1 to create and deploy everything

- 3 to destroy everything

We’ve just provisioned and deployed a full IaC environment highlighting a green-blue deployment 🥳

If this has piqued your interest, head over to the repo and play around!