Create a discovery service using kdb+ 🔍

First things first, the code for this blog can be found here.

A discovery service can be a very nice piece of kit in your infrastructure.

Having some central application that maintains information about every service in your application estate can be extremely beneficial to developers and the business.

Interacting with this service means it’s easy to obtain location details of other services, their health state, their kdb+ version etc.

So without further ado, this blog will build an in-house discovery service in kdb+ using an asynchronous heartbeat model.

The plan

All clients will load a client library (the .client namespace) on start-up.

Each client asynchronously calls the function .master.storeHb, which is executed remotely on a master process.

This function will upsert a record to a table .master.discovery.

This table will keep track of all heartbeats and services. It will serve as the golden source of truth.

The .master.discovery table contains an active column. If the service is regularly publishing updates, then this service is marked as active 🟢 in .master.discovery.

If the master process doesn’t receive an update from a service within some set interval, it will be marked as inactive 🔴.
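A minimal sketch of that active/inactive check follows. It assumes a keyed .master.discovery table with lastHb and active columns and a hypothetical .master.timeout of 90 seconds. The post does not state the interval, and neither the column names nor .master.checkActive are taken from the author's repo.

```q
/ Hypothetical inactivity interval; the original post leaves it unspecified
.master.timeout:0D00:01:30

/ Cut-down, hypothetical schema: one row per host/port
.master.discovery:([host:`symbol$();port:`int$()] lastHb:`timestamp$(); active:`boolean$())

/ Mark a service active only if its last heartbeat is younger than the timeout
.master.checkActive:{[now]
  update active:(now-lastHb)<.master.timeout from `.master.discovery;
  }
```

Calling .master.checkActive .z.p from the master's timer would keep the flag current without any client involvement.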

The port open/port close (.z.po / .z.pc) event handlers will be overridden with connect/disconnect functions that update some internal tables whenever a handle is opened or closed.
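The handler overrides could look roughly like the sketch below. The .master.conns table and its columns are hypothetical. The callbacks themselves are standard kdb+: .z.po receives the newly opened handle, and .z.pc receives the handle that has just closed.

```q
/ Hypothetical connection log, keyed on the IPC handle
.master.conns:([handle:`int$()] user:`symbol$(); addr:`int$(); connected:`timestamp$())

/ .z.po fires when a client opens a handle: record who connected, from where, and when
.z.po:{[h] `.master.conns upsert (h;.z.u;.z.a;.z.p);}

/ .z.pc fires after a handle closes: drop that handle's row
.z.pc:{[h] delete from `.master.conns where handle=h;}
```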

We will also create an HTML/JS dashboard that interacts with the discovery service via websockets. This makes it easier to eyeball which processes are in our application estate and check their health 📊.

The code

I’m not going to bore you with a deep dive into the logic. Instead, I’ll extract the snippets I deem important.

The code within the repo should be adequately commented and can be easily followed by walking through the demo explained in the README.

.master.storeHb:

- This function is executed on the master discovery service.
- It is called (asynchronously) by each client that has elected to publish heartbeats.
- It upserts a payload to the table .master.discovery.

.master.discovery looks like:

☝️ The process name in the above table is set to an arbitrary value in this example. Normally it would be set to an actual service, e.g. rdb, rts or hdb.
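.master.storeHb itself can be very small. The sketch below makes some assumptions. The column set is hypothetical, because the real one is in the author's repo. The table is keyed on host and port so that repeated heartbeats from one service overwrite a single row instead of piling up.

```q
/ Hypothetical schema; keyed on host and port so each service occupies one row
.master.discovery:([host:`symbol$();port:`int$()]
  procName:`symbol$(); pid:`int$(); kdbVer:`float$();
  hbCount:`long$(); lastHb:`timestamp$(); active:`boolean$())

/ Called remotely (asynchronously) by each client.
/ payload: dictionary of host, port, procName, pid, kdbVer and hbCount
.master.storeHb:{[payload]
  / stamp the receive time on the master and flag the service as alive
  row:payload,`lastHb`active!(.z.p;1b);
  `.master.discovery upsert row;
  }
```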

.client.publish:

- This is the publish function executed on a timer by each client.
- It asynchronously executes the function .master.storeHb with a payload of the form:
- It increments the heartbeat counter by 1 each time.
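Here is a sketch of the client side. It assumes a payload dictionary whose keys match the hypothetical discovery columns (host, port, procName, pid, kdbVer, hbCount). The names .client.procName and .client.hbCount, and the helper .client.payload, are illustrative and not necessarily the author's. The call goes through a negative handle, which is how q sends a message asynchronously.

```q
/ Hypothetical client state
.client.procName:`myProc
.client.hbCount:0

/ Build the heartbeat payload, incrementing the counter by 1 on every call
.client.payload:{[]
  .client.hbCount+:1;
  `host`port`procName`pid`kdbVer`hbCount!(.z.h;system"p";.client.procName;.z.i;.z.K;.client.hbCount)
  }

/ Async (fire-and-forget) call: neg[h] sends without waiting for a reply.
/ Assumes .discovery.hdl already holds an open handle to the master.
.client.publish:{[]
  neg[.discovery.hdl] (`.master.storeHb;.client.payload[]);
  }
```

Because the call is asynchronous, a slow or busy master never blocks the client's own work.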

.client.connect:

- This is the connect function executed by each client.
- It checks for the existence of a handle defined by .cfg.discovery.handle. If this exists, it attempts to connect to the discovery master server.
- The handle to the discovery service is stored in the variable .discovery.hdl. If the client cannot connect to the discovery service (e.g. if the master server is down), .discovery.hdl is set to a null int (0Ni).

Note: .cfg.discovery.handle is defined in the init code during startup.
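One way to write .client.connect is shown below. It assumes that .cfg.discovery.handle holds a connection symbol such as `:localhost:5000. That value is a placeholder; the real one comes from the author's init code. Protected evaluation (@[f;x;handler]) turns a failed hopen into 0Ni instead of an error. The 1000 ms connection timeout is also an assumption.

```q
/ Placeholder target; in the real setup the init code defines this
.cfg.discovery.handle:`:localhost:5000

.client.connect:{[]
  / value signals an error if the name is undefined; trap it and use a null symbol
  target:@[value;`.cfg.discovery.handle;`];
  if[null target; :.discovery.hdl:0Ni];
  / hopen (target;timeoutMs) returns a handle; on failure log and fall back to 0Ni
  .discovery.hdl:@[hopen;(target;1000);{[err] -1 "discovery connect failed: ",err; 0Ni}];
  }
```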

.client.run:

- This function is executed as a cron job on the client.
- It first checks that the handle to the discovery master server is valid. If the handle is null, it executes .client.connect[] to retry connecting to discovery.
- If the handle is valid and .client.sendHB is set to true (1b), it executes .client.publish[] to send heartbeats to the discovery service.
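The decision logic in .client.run is short enough to show in full. In this sketch, .client.connect and .client.publish are stubbed out so it runs on its own; the stubs only record that they were called, using a hypothetical .client.calls list. Driving the job from the q timer (.z.ts) is an assumption about how the cron is implemented.

```q
/ Stubs standing in for the real functions, recording which one ran
.client.calls:`symbol$()
.client.connect:{[] .client.calls,:`connect;}
.client.publish:{[] .client.calls,:`publish;}

.client.sendHB:1b
.discovery.hdl:0Ni

/ Reconnect when the handle is null; otherwise publish if heartbeats are enabled
.client.run:{[]
  if[null .discovery.hdl; :.client.connect[]];
  if[.client.sendHB; .client.publish[]];
  }

/ Run on the q timer; start it with \t 5000 (a 5-second interval is an assumption)
.z.ts:{[now] .client.run[]}
```

Returning straight after the reconnect attempt means a freshly reconnected client starts publishing on the next tick rather than in the same one.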

A frontend dashboard 💻

Although it’s probably preferable to query for service details over a handle, why not create a fancy dashboard that does all this for us?

Clicking a few buttons 🔲 on this dashboard lets us subscribe to all services, or a subset of them, and continually receive updates for those processes!
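Under the hood, subscriptions and pushes can be handled with kdb+'s websocket callbacks. .z.ws receives each websocket message, .z.w is the handle it arrived on, and .j.k/.j.j convert between JSON and q. This is only a sketch, and several parts are assumptions: the JSON message shape ({"sub":[...]}), the .master.subs registry and the .master.send wrapper. The post's .master.pub may work differently.

```q
/ Hypothetical, cut-down table contents
.master.discovery:([host:`symbol$();port:`int$()] procName:`symbol$(); active:`boolean$())

/ Websocket subscribers: handle -> process names (an empty list means "all")
/ A real version would also remove closed handles in .z.wc
.master.subs:(`int$())!()

/ Assumed message shape from the dashboard: {"sub":["rdb","hdb"]} or {"sub":[]}
.z.ws:{[msg] .master.subs[.z.w]:`$(.j.k msg)`sub;}

/ Thin wrapper around the async websocket push, kept separate so it is easy to stub
.master.send:{[h;json] neg[h] json}

/ Push each subscriber its filtered view of .master.discovery as JSON
.master.pub:{[]
  {[h;names]
    t:$[count names; select from .master.discovery where procName in names; .master.discovery];
    .master.send[h;.j.j 0!t]
    }'[key .master.subs;value .master.subs];
  }
```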

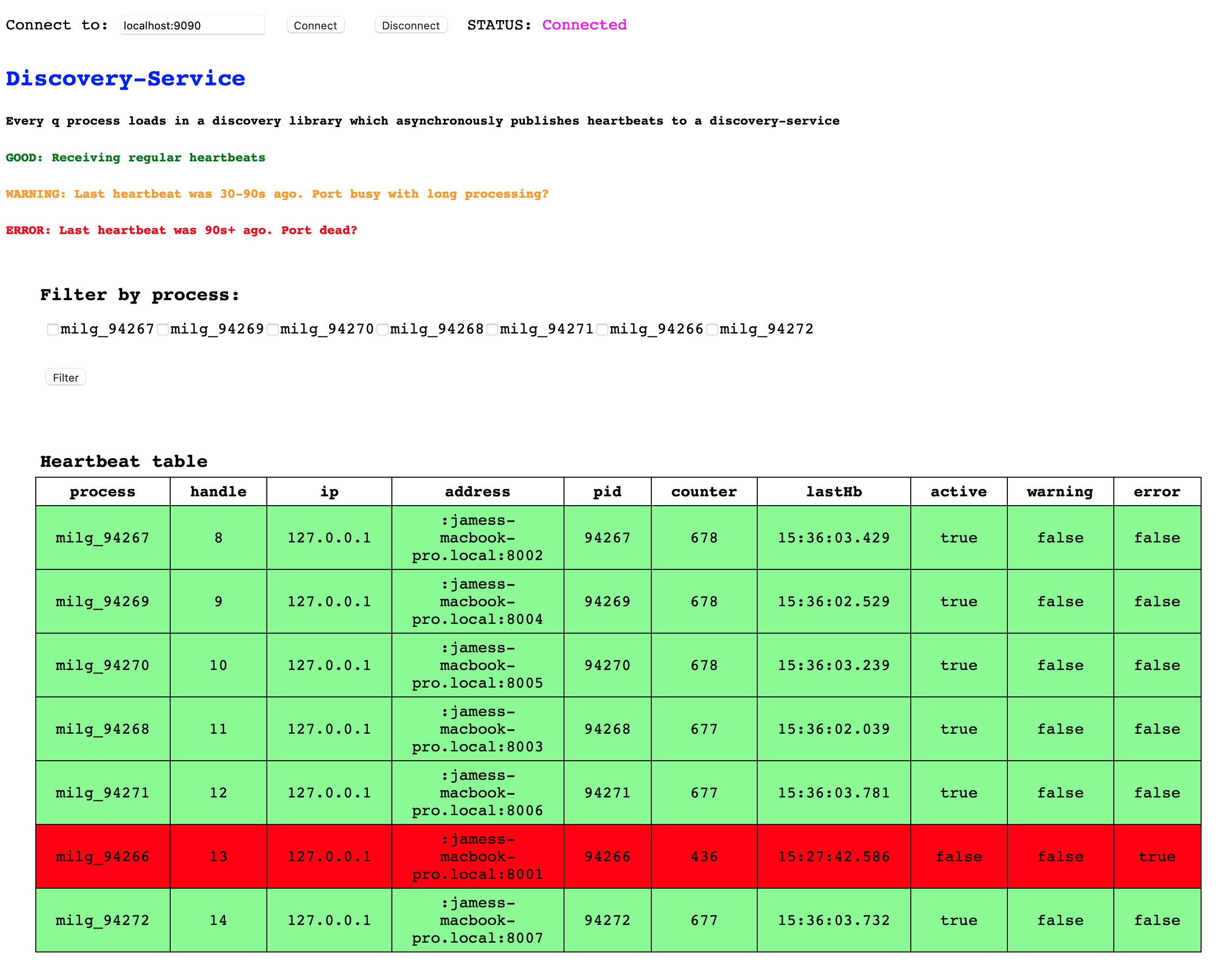

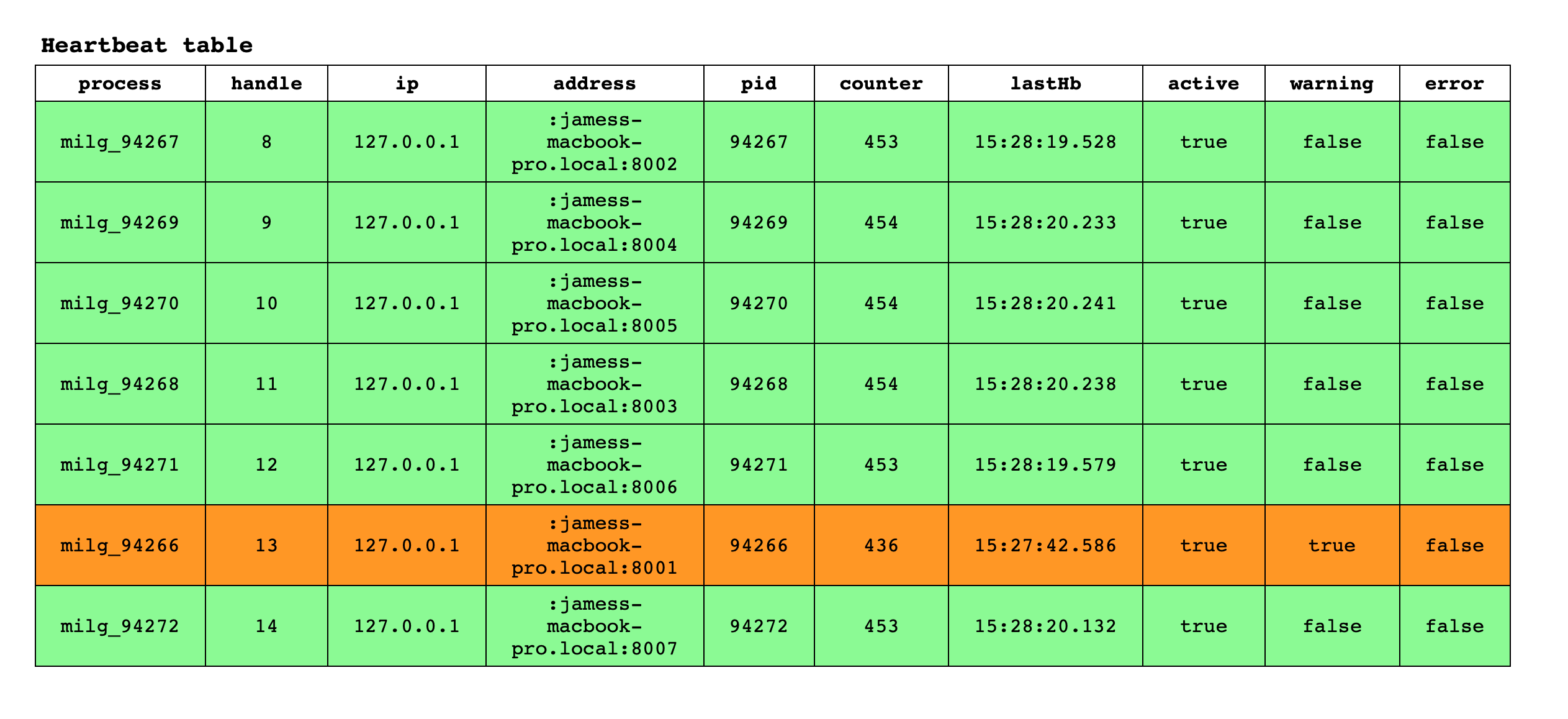

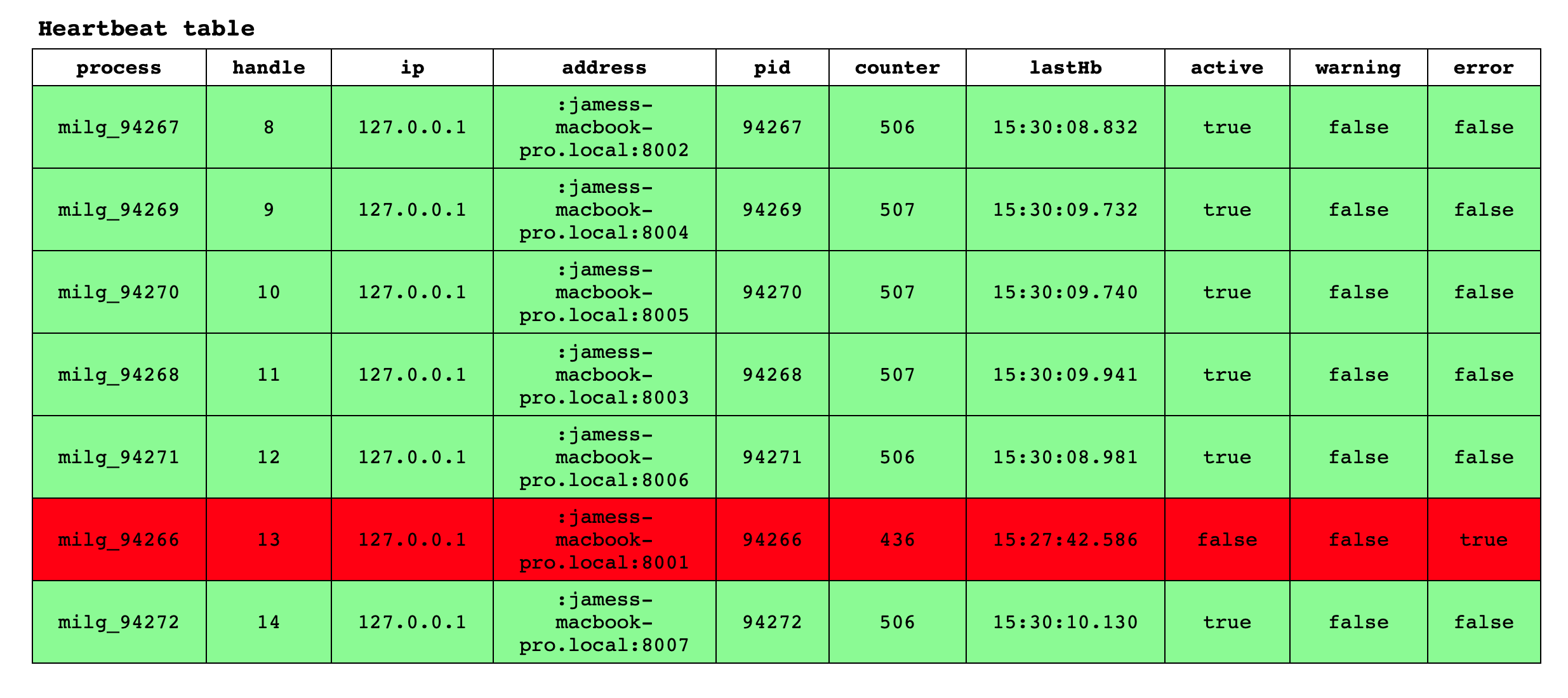

This dashboard will connect to the discovery service and will display the table .master.discovery, as shown below:

The discovery service publishes updates to this dashboard via .master.pub, which is called inside .master.run (executed as a cron job):

- If the master hasn’t received an update from a service in the last 30 seconds, that service’s row changes to 🟧 in the dashboard.
- If it hasn’t received an update in the last 90 seconds, the row changes to 🟥!
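The colour thresholds boil down to bucketing each service by heartbeat age. The sketch below uses bin with the 30- and 90-second cut-offs from the text. The function .master.status and the green/amber/red labels are hypothetical names, not the repo's code.

```q
/ Map heartbeat age to a dashboard colour: <30s green, 30-90s amber, >=90s red
.master.status:{[now;lastHb]
  thresholds:0D00:00:00 0D00:00:30 0D00:01:30;  / lower bound of each bucket
  labels:`green`amber`red;
  / bin returns the index of the last threshold <= age; 0| clamps negative ages (clock skew)
  labels 0|thresholds bin now-lastHb
  }
```

Because bin works on vectors, a single call can classify an entire lastHb column (hypothetical) just before each publish.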

Bingo, we’ve created a simple in-house discovery service!

Going forward, we can create some stored procedures and lock down what can be executed and who can see what within the discovery service.

Now, I’m not saying this is a replacement for Consul or Prometheus per se, but if your budget is tight, why not create an in-house service?